The Adapter Diaries – Oracle Adapter – 2

Having successfully started with the Oracle adapter and dumped the entire table out to a file, I decided to look into the mechanisms for getting the data out for the official assignment, and I’d like to share what I found. As I mentioned in the previous article, my first forays into the adapter stick to the simpler options and there is no rocket science involved here, so if you are still interested, read on.

Choosing The Notification Mechanism

I picked the TblChangedEvent first. One of the supplier’s tables is a BusinessDocument_Header table where entries are made whenever these documents are created or updated. Further details about the contents of the business document are available in a mass of other tables, but the main notification source is this Header table.

Going by the name of the event alone, I assumed that the adapter would somehow know when a change occurred in the table and would be able to notify me. So, using the same receive port that I had previously created, I added another receive location and in that location I set a table changed event for the Header table. The first time round, of course, all the rows were output into the file. I didn’t mind that. I changed some rows in the table and waited. After the polling interval the same thing happened again: all the rows were dumped, not just the ones I had changed.

At first I was rather disappointed with the outcome, but on thinking about it a bit more, it all seems perfectly logical. In a generic, comprehensive adapter, the transmitting system cannot be expected to know what constitutes a change (or more specifically, what the user’s definition of a change is). Is it a new insert? Is it an update to a specific column or set of columns? This could be possible in a custom adapter written expressly for this purpose, but not in a generic one.

Before looking at other options, I looked at the “Delete After Poll” setting for this TblChangeEvent. This works fine. As soon as the table data was returned to me the entire contents were deleted, and the next time I added a row it got returned immediately and the contents were truncated again. However, while interesting to play with on a disposable dev schema, this option is not feasible to apply on the main Header table because that’s a core part of the application and there could be other components subscribing to that data.

The next option I tried was to use NativeSQL with polling and post-polling statements. Since I have a dev version of the database, I added another column, “HAS POLLED”, defined as a CHAR(1) and set to N by default. The Poll statement selects the rows where this column is N and the Post-Poll statement sets the column to Y. This works as advertised. It was also interesting to read some details about polling in the MSDN article “Poll, Post Poll, and TableChangeEvent”.

A useful note about the transactional feature in that article is: “It is important to note that the execution of the poll statement and post poll statement is performed within a transaction of serializable isolation level. Records or changes that were not visible when executing the poll statement are also not visible when the post poll statement executes. This may reduce throughput on some systems, but it guarantees that the above-mentioned example only affects the data that was sent to BizTalk Server with the poll statement.”

The article also goes on to illustrate examples of a poll and post-poll statement for a sample table. I was quite impressed with this because, from memory (of a dim and distant time) when I worked with the SQL adapter, we had to roll our own transactional notification using stored procs etc. (I didn’t get round to using updategrams) to update the columns that had been sent for consumption.
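The flag-column approach can be sketched in a few lines. This is only an illustration under stated assumptions: SQLite stands in for Oracle, the `header` table, its columns and the `poll_once` helper are hypothetical names, and the flag column is spelled `has_polled`. The poll and post-poll run inside one transaction, and the post-poll only touches the rows the poll actually returned, which is the behaviour the MSDN note describes.

```python
import sqlite3


def make_header_table(conn):
    # Hypothetical stand-in for the supplier's Header table plus the flag column.
    conn.execute(
        "CREATE TABLE header ("
        " doc_id INTEGER PRIMARY KEY,"
        " doc_type TEXT NOT NULL,"
        " has_polled TEXT NOT NULL DEFAULT 'N')"
    )


def poll_once(conn):
    """Return the rows not yet polled and mark exactly those rows as polled."""
    with conn:  # commits on success, rolls back if anything raises
        # Poll statement: only rows still flagged 'N'.
        rows = conn.execute(
            "SELECT doc_id, doc_type FROM header "
            "WHERE has_polled = 'N' ORDER BY doc_id"
        ).fetchall()
        # Post-poll statement: flag only the rows that were just read.
        conn.executemany(
            "UPDATE header SET has_polled = 'Y' WHERE doc_id = ?",
            [(doc_id,) for doc_id, _ in rows],
        )
    return rows
```

Calling `poll_once` twice in a row returns the pending rows the first time and an empty list the second time, until new rows arrive.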

This option works when you have control over the schema of the table (or you are working on your own table), but in my case the supplier won’t be too happy about me adding columns to their tables, so this option (polling the base table using a flag attached to it) was out of the question.

This left me with just two options:

- A trigger on the main table copying data to a target table, which can then be consumed in either fashion (“tblchange with delete after poll” or “poll & post-poll statements”). This is still problematic because the supplier might not like it: extra triggers could interfere with business logic or adversely affect performance.

- Leveraging timestamp columns and external “poll tables”, as Richard Seroter pointed out in the feedback section of his Walkthrough article. (To summarise: he had another table with just one entry in it, the last poll time, used that as a control element when polling the main table, and set this value in the post-poll event.) This seems a simple enough option and on testing it out it works brilliantly. It has the advantage that the supplier won’t mind me adding another table and it won’t affect their upgrade scripts etc.

So this “External Poll Control” pattern is the least invasive of my options. At this point I still don’t have the need for any stored procs (but they may still be required later).

Lessons & Considerations

Some of the things to consider when choosing your notification mechanism are:

(1) Schema Control and support: if you don’t control your schema, obviously your options are limited, but there are creative ways around it (like the external table). Of course, if the system is completely maintained by a third party and the DBA won’t let you touch the schema, then this ain’t gonna work. You may have to resort to the TblChangeEvent to give you the whole data set and then build a custom “changeset” identification mechanism in a database that you control.

(2) Triggers are not always good: Even if you do control the schema, triggers may not be a good thing to use (consider performance and data integrity). Some systems may have more than one trigger on a table and there may be some sequence of execution that could trip you up. It’s no use trying to tell them things like “the business logic shouldn’t be in the database”. Sometimes it has to be, but even if it doesn’t, it’s there. Live with it!!!

(3) Possible Data Loss: In a heavy load situation, even if you could chuck the entire table at BizTalk (via TblChange) and use a “delete after poll” event, what’s the guarantee that the delete statement won’t kill off transactions that were added in the little window while stuff was being sent to BizTalk? That is, if you had 100 notification records and sent them for consumption, maybe 25 more would have been added before the delete gets fired. Will the delete knock them off as well before they can get sent in the next round? Or is this guaranteed not to happen? (If you know the adapter well enough to answer this, please let me know. I could cook up a test scenario but it would save time if I was given the answer here.)

(4) Size of the dataset & complexity of schema: If it’s possible, go for the minimum data needed to notify and then use a content enrichment step to get the rest of the data through other published interfaces. For example, in our scenario, the business document data is spread out across many tables and a lot of it is contained in generic “name-value” pairs. The SQL to build the whole document would be horrendous, and that’s assuming we could spare the time to write it all. Fortunately the supplier has provided a webservice that retrieves the business document for us, so all we need is to get the doc reference in the notification.

Of course, we have to consider that if we get thousands of notifications, then calling the webservice that many times is not good either and some throttling would be needed. It’s a Java-based system so I can’t opt to do an inline call as I might attempt if I had a .NET target. This area is still up for grabs and I’m trying to gather some stats on volumes to know if the webservice is still the best choice. I’ll keep you posted.
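One possible shape for that throttling, as a rough sketch only: collapse repeated notifications for the same document, then work through the references in small batches with a pause between them. Everything here is hypothetical, including the `fetch_document` callable that stands in for the supplier’s webservice call, and the batch size and pause are placeholder values that would need real volume stats.

```python
import time
from itertools import islice


def batch_references(doc_refs, batch_size):
    """Yield de-duplicated doc references in lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    # dict.fromkeys drops repeats while keeping first-seen order.
    unique = iter(dict.fromkeys(doc_refs))
    while True:
        batch = list(islice(unique, batch_size))
        if not batch:
            return
        yield batch


def enrich_in_batches(doc_refs, fetch_document, batch_size=50, pause_seconds=1.0):
    """Call fetch_document once per distinct reference, pausing between batches."""
    documents = {}
    for number, batch in enumerate(batch_references(doc_refs, batch_size)):
        if number > 0:
            time.sleep(pause_seconds)  # crude throttle between batches
        for ref in batch:
            documents[ref] = fetch_document(ref)
    return documents
```

With five notifications covering four distinct documents and a batch size of two, the service would be called four times in two batches.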

If there are other things that need to be considered and you’d like to contribute to this list, please let me know.
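For point (1), the custom “changeset” identification could look something like the sketch below: keep a fingerprint of every row from the previous full dump in a store you control and compare it against the next dump. The function names are made up for this example, the first column is assumed to be the key, and a real version would persist the fingerprints in a table rather than a dictionary.

```python
import hashlib


def row_fingerprint(row):
    """Hash a row's values so that any change in any column changes the result."""
    return hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest()


def diff_snapshot(previous, rows, key_index=0):
    """Compare a full table dump against the previous fingerprints.

    Returns (current, inserted, updated, deleted), where `current` is the
    new fingerprint map to keep for the next comparison.
    """
    current = {row[key_index]: row_fingerprint(row) for row in rows}
    inserted = sorted(k for k in current if k not in previous)
    updated = sorted(
        k for k in current if k in previous and previous[k] != current[k]
    )
    deleted = sorted(k for k in previous if k not in current)
    return current, inserted, updated, deleted
```

The first dump reports every row as inserted; later dumps only report the keys that are new, changed or gone.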

What’s next?

I’m currently still investigating how much work is involved in building the “minimum data set” that will allow me to peacefully content enrich by using the web service. I don’t want a flood of notifications about business docs I’m not interested in, and there is some data available that will allow me to filter by document type. I also don’t want to hardcode that filter, so there’s some work to be done in the area of parameterising the filter and making it possible for the system to be extended to get more documents in future without rewriting the code.
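As a sketch of what parameterising the filter might mean, the wanted document types can live in configuration and the query can be built from them with bind placeholders. The JSON layout, the `header` table and the helper names are assumptions for illustration, and SQLite again stands in for Oracle; how such a query would be fed to the adapter’s poll statement is not covered here.

```python
import json
import sqlite3

# Hypothetical configuration; this could equally be a file or a lookup table.
FILTER_CONFIG = '{"doc_types": ["INVOICE", "CREDIT_NOTE"]}'


def load_doc_types(config_text):
    """Read the list of wanted document types from JSON configuration."""
    doc_types = json.loads(config_text)["doc_types"]
    if not doc_types:
        raise ValueError("at least one document type is required")
    return list(doc_types)


def build_poll_query(doc_types):
    """Build a query with one bind placeholder per configured document type."""
    placeholders = ", ".join("?" for _ in doc_types)
    sql = (
        "SELECT doc_id, doc_type FROM header "
        f"WHERE doc_type IN ({placeholders}) ORDER BY doc_id"
    )
    return sql, doc_types


def matching_documents(conn, doc_types):
    """Run the filtered poll query and return the matching rows."""
    sql, params = build_poll_query(doc_types)
    return conn.execute(sql, params).fetchall()
```

Adding a new document type then becomes a configuration change rather than a code change.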

I’ve talked a little bit about the Content Enricher already. I was intending that to be my next post, but it seemed better to put it all here. There’s more to say on that subject so stay tuned. Depending on the availability of time, I’m going to put more R&D into the Oracle adapter. The External Poll route seems sufficient for now, but I just have this feeling that it won’t be as easy as this.

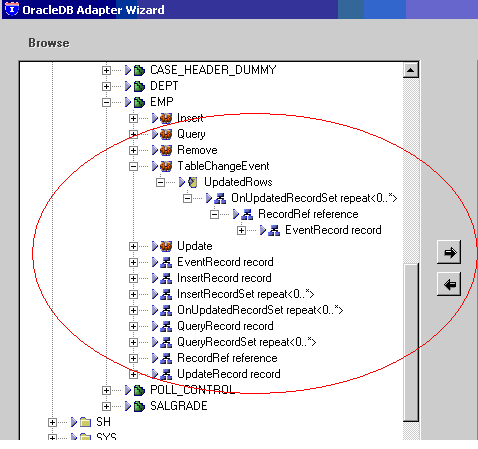

There seems to be a wealth of options in the Oracle adapter there and I don’t think even the MSDN documentation goes into all of them. Look at the following screenshot when you browse through the schema (on clicking Manage Events)

I thought all the highlighted things may be further filters on the events to give us more control over the data but when I tried to select any of them it threw up this odd little message box saying “The selected item can not be used as a receive”.

I think that’s rather odd. What’s the point of showing them if they can’t be used at all? Maybe that’s for the SEND side? I’ll need to take a look but if anyone has some insight on that and would like to share it, please let me know.

Until next time.. happy Biztalking !!

Great article. I too have been perplexed by the fact that the supplied Oracle adapter cannot monitor a new transaction in a table and write that specific record(s) to an XML file. I was told that it could, and have invested a lot of time and research in this, thinking that it was me that could not learn this software. Very frustrating to find out that it cannot (without apparently coding your own custom adapter, and I still cannot think of a development way to do this). However, I am also very relieved to know that others are having the same issues. I will bookmark your site.

thanks!

–scotta

J. Scott Adams

April 1, 2008 at 5:15 PM

Scott,

Thanks for the feedback. IMO, they are missing just one thing: a configurable way for the user to indicate what is a change. I’m not an adapter expert, but if I were to write this functionality, I would consider using a custom affiliate application in SSO to define and manage this (i.e. choose the table, the columns and the “trigger” event), and if it’s a time-based event then Richard’s external poll table technique could be added on to that. But it would start to resemble a hybrid between this event and a NativeSQL polling mechanism. Maybe I should try and write a sample adapter sometime!!!

Then again, perhaps there is some undocumented feature in this Oracle adapter that lets you customize the TblChange event.

Cheers

Benjy

santoshbenjamin

April 1, 2008 at 11:26 PM

I feel your pain with the polling part 😉

Using the poll statement and post poll statement to update some status works for many cases, however I found duplicate data being polled in 2 cases:

– In the initial load, the receive location tends to start up faster than the send port, so the receive location may poll again in the next interval before the send port finishes its initialization.

– I have a situation where I need to poll data every second, and poll statement x may be executed before post poll statement x-1 has been executed.

So in the end, I found a workaround to avoid this: use an Oracle function to select and update the status within the select statement, which eliminates the dependency on the post poll statement.

I have to say that there are pros and cons with this workaround, but I think it really depends on the situation that we have 😉

I hope you don’t mind if I post a link in here 🙂

http://bembengarifin-tech.blogspot.com/2008/04/biztalk-2006-oracle-adapter-odbc.html

Thanks.

Bembeng Arifin

May 22, 2008 at 3:19 PM

Hi ,

Thanks for the feedback. The duplicate data issue seems rather significant. I also like the idea of the Oracle function to avoid the post-poll. I’ll try this out in my project. I have bookmarked your post as well.

cheers

benjy

santoshbenjamin

May 22, 2008 at 11:10 PM

[…] Arifin commented on my second post in this series calling out something he had found when working with polling and i […]

The Adapter Diaries - Oracle Adapter - 4 « Santosh Benjamin’s Weblog

May 27, 2008 at 11:14 PM