Archive for the ‘Biztalk Adapters’ Category

MockingBird Futures – Mock BizTalk Adapters

MockingBird v2 has almost reached RTM. Just a few more days to make some final touches and it will be ready. The team has been discussing how to take it further and one thing that’s been on my mind for a long time is the topic of mock BizTalk adapters. What I would like to do is write some adapters with the WCF LOB Adapter SDK that link up with MockingBird’s simulation engine so we can then simulate various protocols such as SQL, Oracle, MSMQ, MQSeries etc.

The first question anyone would ask me is “Why?”. Currently MockingBird works decently as a receiver of messages from BizTalk in one-way and solicit-response modes (it also supports duplex channels). If BizTalk sends a one-way message or a solicit-response request, all you need to do is replace the endpoint URL with the MockingBird URL and, with the right configuration, the system will send back the messages you were expecting. So why do we need to mock an adapter when we can simply use the WCF-Custom adapter?

The most obvious reason is that MockingBird only handles the send side and does nothing on the receive side, but I think there are many other reasons to go down the adapter route (on both the send and receive sides).

For the send side

- Adapters will allow us to manipulate and use context properties. Where transport-level correlation is used (MQSeries, MSMQ etc.), the adapter will let us force-copy correlation identifiers to the response. Without this, we would need a queue listener that understood the MQ/MSMQ protocols and set the properties accordingly.

- Adapters will allow us to simulate transactional behaviour.

- It minimizes the ‘intrusion footprint’ of the tool. Currently, even if you were targeting SQL, you would need to switch to WCF-Custom and make a lot more binding changes. If we adhered to the SQL adapter's properties, it would reduce the amount of work. In fact, all we should require is a change of URL (e.g. mbsql://).

- If an application interface doesn’t support web services, piggy-backing on the WCF LOB adapters will let us mock that system (assuming the system is already wrapped with a WCF LOB adapter, we could leverage that adapter to direct messages into MockingBird). So if there is a SQL or Oracle database that we connect to via WCF-SQL, WCF-Oracle etc., we can just add mock flavors of the same adapters; otherwise we would have to mock the entire SQL/Oracle protocols, which is not feasible.
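To make the correlation point concrete, here is a minimal Python sketch of what a mock send adapter could do with message context. It gives the canned reply its own identity but forces the reply's correlation identifier to the request's message identifier, which is the convention that MSMQ and MQSeries request/reply correlation commonly relies on. The `Message` type, the context keys and `mock_reply` are hypothetical illustrations. They are not part of MockingBird, BizTalk or the WCF LOB Adapter SDK.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Message:
    """Hypothetical stand-in for a message body plus its context properties."""
    body: str
    context: dict = field(default_factory=dict)


def mock_reply(request: Message, canned_body: str) -> Message:
    """Build a canned reply that correlates back to the request."""
    request_id = request.context.get("MessageId")
    if request_id is None:
        raise ValueError("request has no MessageId to correlate against")
    reply = Message(body=canned_body)
    # The reply gets a fresh identity of its own...
    reply.context["MessageId"] = str(uuid.uuid4())
    # ...but its correlation id is forced to the request's id, which is what a
    # transport-correlated receiver (e.g. a queue listener) would match on.
    reply.context["CorrelationId"] = request_id
    return reply


if __name__ == "__main__":
    req = Message("<Order/>", {"MessageId": "abc-123"})
    rep = mock_reply(req, "<OrderAck/>")
    print(rep.context["CorrelationId"])  # abc-123
```

In a real adapter, these values would be context properties on the BizTalk message rather than a dictionary. The point is only that the mock controls them, so no protocol-aware listener is needed.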

For the receive side

- Although we can always create a test receive location with the File adapter, this is limited to ‘unit tests’. We cannot simulate other transport protocols (for example, SQL polling), and with queues (MQ/MSMQ) we would need an app that could send to the queue to activate the receive location.

- We could extend an adapter to use the ‘Submit Direct’ SDK sample to submit messages directly into BizTalk, and make this configurable.

- The context properties argument also applies here: a lot can be set artificially on the message. Similarly, transactional behaviour could be applied.

So, what about other current tools in this general area?

- Some of this kind of testing can be done with BizUnit (for example, sending messages to a queue or reading from a queue). BizUnit could also be extended (for example, with a correlation-aware queue listener), but BizUnit’s focus is on distinct steps that verify behaviour rather than on causing or forcing behaviour, which we can do via the adapters. The adapter approach also keeps this testing ‘within the boundary of BizTalk’, while BizUnit will complement it nicely from the outside.

- LoadGen can also help on the receive side. Again, LoadGen’s focus is on load/stress testing rather than functional behaviour. I think it may be possible to use LoadGen with MockingBird (maybe as part of these adapters).

- BizMock is another tool in similar territory, but having discussed MockingBird with Pierre Milet Llobet, the author of BizMock, I don’t see any overlap here. BizMock is focussed on providing a fluent interface to support a TDD approach to BizTalk testing, along with a mock adapter used within that test scope, whereas MockingBird’s adapters will be ‘proper’ infrastructure and integration test facilitators rather than a unit test tool.

Okay, so these are my thoughts on extending MockingBird into the arena of mock BizTalk adapters, and I would really like to get feedback from the BizTalk community on this. What do you think of the idea? If you have some experience with the WCF LOB Adapter SDK (which I’ve only played with briefly), perhaps you have some suggestions, tips or gotchas you could make us aware of? Is this an area you could get involved with? You wouldn't necessarily have to contribute code (there are bandwidth constraints on all of us), but you could send us suggestions and design ideas, or maybe be an early tester.

Let me know. All feedback would be greatly appreciated.

BizTalk, WCF and the Bad Gateway

We ran into an interesting problem a couple of days ago and I decided it would be worth posting the solution in case anyone else runs into it.

The scenario involves a Windows Service communicating with a BizTalk WCF endpoint that is hosted using WCF-CustomIsolated and some fairly complex custom bindings, notably using X.509 mutual certificates. This is the second half of the conversation. In the first half, BizTalk sends a request to a vanilla WCF endpoint and gets an ACK. The service then does some processing, and the WinService does a “call back” with the “real response” (the outcome of the processing). Both communication routes are secured via the same bindings. We didn't have any trouble with the transmission from BizTalk to the WCF service, but the communication initiated by the WinService always failed with an HTTP 502 Bad Gateway message. This had us stumped for a while, as some articles we read seemed to suggest that there was a problem on the server. Now, if you have looked into custom bindings and certificates in BizTalk, you will know that there is some really heavy-duty and scary stuff there, so we wondered whether the way we had set up the certificates and credentials was playing havoc with the communication.

So I used the trusty WSCF.blue to mock up another WCF service that mimicked the BizTalk endpoint, replete with the bindings (no, WSCF.blue doesn't support custom bindings yet, but it will soon). I swapped the URI in the WinService, and it worked just fine. Still puzzled, I then created a console app to mimic the WinService, and it was able to communicate with both my mock BizTalk service and the real endpoint. Curiouser and curiouser. At that point, the only difference between the BizTalk service and the mock service was the app pool. However, when I used the same app pool, the problem still manifested only between the WinService and BizTalk.

Anyway, with some guidance from an expert colleague (who unfortunately wasn't around when I was writing those mock clients and services), it turned out that the problem was the “UseDefaultWebProxy” binding setting in the WinService. The difference between the WinService and the console client was that the console app ran under my credentials, and in my IE settings I had turned off the proxy for the specified URL. So although I hadn't set the value to “false” in the client (it defaults to true), it automatically picked up my settings. The WinService, however, ran under another user account, so my proxy settings didn't apply to it, and we had to explicitly set the value to “false”.

That was it. A little setting buried deep in the bindings caused us all that grief. After sorting this out, I stumbled across this post from Kenny Wolf, which points to the offending setting. I wish I had found it earlier, but then I wouldn't have realized how the console client was picking up those settings. So, I hope this helps someone in a similar situation.
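The same per-account trap exists outside WCF. The following is purely an analogy: it uses Python's standard library, not WCF or BizTalk. `urllib.request.build_opener()` installs a default `ProxyHandler` that reads proxy settings for the current account (from environment variables, and on Windows from the user's Internet Settings). An explicit `ProxyHandler({})` turns proxying off no matter which account runs the code. The function name below is illustrative.

```python
import urllib.request


def build_opener_without_proxy() -> urllib.request.OpenerDirector:
    """Return an opener that never routes requests through a proxy.

    build_opener() normally adds a ProxyHandler() built from getproxies(),
    i.e. from the *current* account's proxy configuration. Passing our own
    ProxyHandler with an empty mapping replaces that default, so the result
    no longer depends on which user the process runs as (the analogue of
    explicitly setting useDefaultWebProxy to false in a WCF binding).
    """
    return urllib.request.build_opener(urllib.request.ProxyHandler({}))
```

In both cases, the fix is the same idea. Make the proxy decision explicit in code or configuration rather than inheriting it from whichever account happens to run the process.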

Introducing ‘Mockingbird’

In my previous post, I briefly mentioned a tool that helped with testing web-service consumers. I’m happy to announce that it is now publicly available on CodePlex under the moniker ‘MockingBird’. Here are some details about the tool: where it came from, how it helps and where it is going. (Most of this info is on the CodePlex space, but I’ll reproduce it anyway.)

The Scenarios for MockingBird

(1) Imagine you are given the WSDL for a third-party web service, but no functioning system is available yet (it may be a brand-new service, or perhaps dev/site licenses are still being negotiated). You need to get on with development right now. What do you code against? You may be a ‘TDD & Mock Objects’ savvy dev, which will help in many cases, but what if you ‘don’t do’ TDD and mocks? Or you may be maintaining or enhancing an existing system that wasn’t coded against interfaces. Or what if you are a BizTalk developer? You cannot mock or inject dependencies into your orchestrations and other components (well, except for pipeline components, but that's another story)!

(2) Next, imagine that you are setting up a build server and multiple environments (DEV, TEST, UAT etc). But now the vendor says you can only have one license for their software. Now how do you run DEV, TEST, UAT in parallel with different data sets? Or you may have more than one license, but what if that service has maintenance schedules that clash with your build? Your build server is then completely exposed to something you don’t control.

I’ve expanded on these scenarios in the tool documentation here, but essentially these are the two main scenarios that MockingBird targets.

The Origins of MockingBird

MockingBird started life as MockWeb, an internal tool that my former colleagues (Senthil Sai and Will Struthers) and I developed. All credit must go to them: Senthil first came up with the concept, and Will then contributed a lot to the code base.

It started when we got rather fed up with having to set up multiple instances of a third-party service and build data sets just to test our BizTalk orchestrations reliably. It grew rapidly and organically. We felt that the concept and tool would be useful to the .NET dev community at large (not just BizTalk teams). However, the structure of the code base at the time did not lend itself to easy extension and needed refactoring before we made it publicly available. It's taken a while, but I’ve now completely rewritten MockWeb. As I mention in the roadmap, I intend it to go beyond HTTP web services, hence the new name.

What's with the name ‘MockingBird’? Sounds daft!

OK, so it's not a tool that sits there and laughs at you when you try to test! 🙂 That's not what the name is intended to convey. Wikipedia says that “They (mockingbirds) are best known for the habit of some species mimicking the songs of insect and amphibian sounds as well as other bird songs, often loudly and in rapid succession”.

So the intent is to mimic web services, and I quite like the name ‘MockingBird’ anyway!

The current implementation

There are two main elements to the current implementation:

- An ASP.NET HttpHandler which is configured to return pre-set responses

- A WinForms GUI to setup the handler and associated configuration for a given WSDL.
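The core idea of the handler can be sketched in a few lines. This is not MockingBird's actual code. It is a Python WSGI application standing in for the ASP.NET HttpHandler, and it looks up a canned SOAP response by the request's SOAPAction header. When nothing is configured for an action, it returns an HTTP 500 with a plain-text explanation. The action URIs and response bodies are made up for illustration.

```python
from typing import Callable, Dict, Iterable


def make_mock_service(canned: Dict[str, str]) -> Callable:
    """Return a WSGI app that replays a preset response for each SOAPAction."""

    def app(environ: dict, start_response: Callable) -> Iterable[bytes]:
        # WSGI exposes the SOAPAction request header as HTTP_SOAPACTION;
        # SOAP 1.1 clients usually send its value wrapped in double quotes.
        action = environ.get("HTTP_SOAPACTION", "").strip('"')
        body = canned.get(action)
        if body is None:
            status = "500 Internal Server Error"
            body = f"No canned response configured for action '{action}'"
            content_type = "text/plain; charset=utf-8"
        else:
            status = "200 OK"
            content_type = "text/xml; charset=utf-8"
        payload = body.encode("utf-8")
        start_response(status, [("Content-Type", content_type),
                                ("Content-Length", str(len(payload)))])
        return [payload]

    return app
```

To try it locally, you could serve it with something like `wsgiref.simple_server.make_server("localhost", 8080, app).serve_forever()`. The client then only needs its endpoint URL pointed at the mock.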

The Roadmap

- The first thing I intend to do is revise the implementation to be fully WCF-based, as I want to be able to intercept calls irrespective of transport protocol. This is one of the reasons I rewrote the code base, and I hope there won't be too much churn as I extend it.

- The second is to make it a sort of platform for testing web services: generating BizUnit test steps, maybe C# test fixtures, and possibly code-generating BizTalk artifacts from given WSDLs and schemas.

- Thirdly, to take it into the realm of mock BizTalk adapters. WCF is key to that. I envisage this as a pair of custom WCF send and receive adapters that can be configured dynamically.

- Beyond that, let's see 🙂.

So, check it out. There’s an alpha release currently available, and your feedback will be appreciated. If you have any thoughts on how the mock BizTalk adapters could be done, I'll be glad to hear them. And of course, if you want to join the project, you will be welcome.

The Adapter Diaries – Oracle Adapter – 4

“Pragma” killed the Post-Poll

Bembeng Arifin commented on my second post in this series, calling out something he had found when working with polling. I decided to follow up on it and, in my opinion, the technique is so simple it's absolutely brilliant!

Basically, to avoid some of the problems with duplicate data pickup when using NativeSQL with Poll and PostPoll, Bembeng used a function in the select statement. That function automatically performed the post-poll update inside an autonomous transaction (PRAGMA AUTONOMOUS_TRANSACTION, hence the title of this post 🙂).

Now, I had taken an instant dislike to NativeSQL when I used it earlier, particularly because I didn't fancy hard-coding a map with index functoids. (I think it may be possible to write a nifty little generic parser for this, and maybe I'll try my hand at it sometime, but I don't have enough time for that now.) I was happy with the schema provided by the TableChangeEvent, so I needed a compromise. I decided to use a view that brought back all the data from the main table where the timestamp was greater than the value in the poll_control table. My aim was that as soon as I got the data successfully, I would call an update statement on the poll_control table.

My data comes in through a port map and gets debatched by an orchestration (calling a pipeline). The normalized ‘event’ documents then get processed by another set of orchestrations. With this disconnected model, it was rather difficult to spot where to update the poll control table. We didn't want too much time to elapse and risk losing records that arrived just after the poll. I tried to create a send port subscribing to the MessageType of the event message, but calling the UPDATE statement didn't work; I got some “object reference not set” type of errors. I guess I haven't really understood how to use the UPDATE statement yet.

So the dilemma was: should I go back to NativeSQL and use Bembeng's approach, or should I stick it out with the UPDATE? And then I had an epiphany: why not both together? (Terribly dramatic, aren't I? :-)) The solution was to use the function inside the view. The view code is something like

“SELECT * FROM XYZ WHERE CREATED_DATE > (SELECT GetLastPollTime FROM DUAL)”.

“GetLastPollTime” being the function, of course. This works very well. Every time the TableChangeEvent is executed, the view is queried, which in turn executes the function. Bembeng provides a sample of the function code in the article.
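The semantics are easier to see in a small simulation. This Python sketch uses SQLite, not Oracle, and it rests on an assumption, since the function code is not reproduced here. It assumes the function returns the stored last-poll time and stamps the current time in a transaction of its own. In SQLite, that has to be an explicit transaction run just before the query, rather than a function call inside the view. The table and column names are hypothetical, and timestamps are plain integers for determinism. The final lines of the usage below illustrate one trade-off of an autonomous update: the marker stays advanced even if the caller's processing fails afterwards.

```python
import sqlite3


def get_last_poll_time(conn: sqlite3.Connection, now: int) -> int:
    """Rough analogue of GetLastPollTime: read the previous poll time and
    advance the marker to `now`, committing immediately in a transaction of
    its own (the role PRAGMA AUTONOMOUS_TRANSACTION plays in Oracle)."""
    conn.execute("BEGIN IMMEDIATE")
    try:
        (last,) = conn.execute("SELECT last_poll FROM poll_control").fetchone()
        conn.execute("UPDATE poll_control SET last_poll = ?", (now,))
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise
    return last


def poll_view(conn: sqlite3.Connection, now: int) -> list:
    """Emulates SELECT * FROM XYZ WHERE CREATED_DATE > (GetLastPollTime)."""
    last = get_last_poll_time(conn, now)
    return conn.execute(
        "SELECT id, created_date FROM xyz WHERE created_date > ? ORDER BY id",
        (last,),
    ).fetchall()


def make_demo_db() -> sqlite3.Connection:
    # isolation_level=None: no implicit transactions; BEGIN/COMMIT are explicit.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE xyz (id INTEGER PRIMARY KEY, created_date INTEGER)")
    conn.execute("CREATE TABLE poll_control (last_poll INTEGER)")
    conn.execute("INSERT INTO poll_control VALUES (0)")
    return conn


if __name__ == "__main__":
    conn = make_demo_db()
    conn.executemany("INSERT INTO xyz (created_date) VALUES (?)", [(5,), (8,)])
    print(poll_view(conn, now=10))  # [(1, 5), (2, 8)]
```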

I’m really chuffed with this discovery (and I learned a fancy new thing in Oracle too: PRAGMA directives, woohoo!). Thank you, Bembeng!

A Rant about embedded ‘connection strings’

Poll and Post-Poll are a nice feature of the Oracle adapter over the SQL adapter (except, of course, for NativeSQL and the frustrations with the oddly named TableChangeEvent that I moaned about earlier). However, one thing I absolutely detest about the adapter is the way it embeds the connection data in the targetNamespace (OracleDB://SERVICE_OR_DSN_NAME/SCHEMA_OWNER/TABLES/TABLE_NAME).

Bah! Humbug! What if you have a different DSN/service (as most people do) for development and production, and for the environments in between such as TEST, UAT and so on? Do you have different schemas? Perish the thought! Maintaining them would be a nightmare.

The approach I have currently taken is to create a fairly generic service name like ‘CRMSERVICE’ in my Oracle TNSNAMES, which maps to the development instance. I also created a System DSN with the same name, ‘CRMSERVICE’. Fortunately, the schema owner is the same in Dev and Live; otherwise I would have had to ask the DBA to create the same schema owner name in all environments. Now when I generate the schemas, they use CRMSERVICE/SCHEMAOWNER, so when I move to a new environment I can just change the TNSNAMES.ORA file. (That's the theory, anyway. I shall know in a couple of days whether it works out. Currently my local and DEV connections point to the same Oracle instance, but that won't be the case when we move to TEST.)
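Here is a tiny Python sketch of why the generic alias helps. It builds the targetNamespace in the format quoted above for each environment, first with environment-specific service names and then with a single alias that TNSNAMES.ORA resolves differently on each machine. Apart from CRMSERVICE and SCHEMAOWNER, the environment-specific service names and the table name are made up for illustration.

```python
def oracle_target_namespace(service: str, owner: str, table: str) -> str:
    """Namespace format the Oracle adapter embeds in generated schemas."""
    return f"OracleDB://{service}/{owner}/TABLES/{table}"


# Hypothetical environment-specific service names: the problem case.
SPECIFIC_SERVICES = {"DEV": "CRMDEV", "TEST": "CRMTEST", "LIVE": "CRMLIVE"}


def namespaces(services_by_env: dict, owner: str, table: str) -> set:
    """Distinct namespaces (hence schema sets) you end up with across environments."""
    return {oracle_target_namespace(s, owner, table) for s in services_by_env.values()}


if __name__ == "__main__":
    per_env = namespaces(SPECIFIC_SERVICES, "SCHEMAOWNER", "CUSTOMERS")
    # One generic alias everywhere; only TNSNAMES.ORA differs per machine.
    alias = namespaces({env: "CRMSERVICE" for env in SPECIFIC_SERVICES},
                       "SCHEMAOWNER", "CUSTOMERS")
    print(len(per_env), len(alias))  # 3 1
```

Three namespaces would mean three sets of schemas to keep in step. One alias means one set, with the environment difference pushed into configuration outside BizTalk.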

I really appreciated the SQL adapter's approach, which let you use any namespace, so you could be creative and just change the connection parameters in the binding file.

Anyway, I guess using SSO (instead of embedded credentials) in conjunction with this generic service name approach would give equal flexibility.

So tell me, how do you handle this issue with the adapter? Does the approach I am using make sense? Any suggestions to make it better?

The Adapter Diaries – Oracle Adapter – 2

Having successfully started with the Oracle adapter and dumped an entire table out to a file, I decided to look into the mechanisms for getting the data out for the official assignment. I’d like to share what I found. As I mentioned in the previous article, my first forays into the adapter used the simpler options, so there's no rocket science involved here. If you are still interested, read on.

Choosing The Notification Mechanism

I picked the TableChangeEvent first. One of the supplier's tables is a BusinessDocument_Header table, where entries are made whenever these documents are created or updated. Further details about the contents of the business documents are spread across a mass of other tables, but the main notification source is this Header table.

Going by the name of the event alone, I assumed that the adapter would somehow know when a change occurred in the table and would be able to notify me. So, using the receive port I had created previously, I added another receive location and set a table change event for the Header table on it. The first time round, of course, all the rows were output to the file. I didn't mind that. I then changed some rows in the table and waited. After the polling interval, the same thing happened again: all the rows were dumped, not just the ones I had changed.

At first I was rather disappointed with the outcome, but on thinking about it a bit more, it all seems perfectly logical. In a generic, comprehensive adapter, the transmitting system cannot be expected to know what constitutes a change (or, more specifically, what the user's definition of a change is). Is it a new insert? Is it an update to a specific column or set of columns? This could be possible in a custom adapter written expressly for this purpose, but not in a generic one.

Before looking at other options, I looked at the “Delete After Poll” setting for the TableChangeEvent. This works fine. As soon as the table data was returned to me, the entire contents were deleted. The next time I added a row, it was returned immediately and the contents were truncated again. This is interesting to play with on a disposable dev schema. However, the option is not feasible for the main Header table, because that table is a core part of the application and there could be other components subscribing to its data.

The next option I tried was NativeSQL with polling and post-polling statements. Since I have a dev version of the database, I added another column, “HAS_POLLED”, defined as CHAR(1) with a default of 'N'. The Poll statement selected the rows where it was 'N', and the Post-Poll statement set it to 'Y'. This works as advertised. It was also interesting to read some details about polling in the MSDN article “Poll, Post Poll, and TableChangeEvent”.

A useful note about the transactional feature in that article is: “It is important to note that the execution of the poll statement and post poll statement is performed within a transaction of serializable isolation level. Records or changes that were not visible when executing the poll statement are also not visible when the post poll statement executes. This may reduce throughput on some systems, but it guarantees that the above-mentioned example only affects the data that was sent to BizTalk Server with the poll statement.”

The article also goes on to illustrate a poll and post-poll statement for a sample table. I was quite impressed with this. From memory (of a dim and distant time), when I worked with the SQL adapter we had to roll our own transactional notification using stored procs etc. to update the rows that had been sent for consumption. (I didn't get round to using updategrams.)
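For illustration, here is the flag-column pattern as a small Python/SQLite sketch. The poll statement and the post-poll statement run inside one transaction, so the post-poll update only marks what the poll returned. SQLite stands in for Oracle here. Its transactions are serializable, and `BEGIN IMMEDIATE` also takes the write lock up front, so no other connection can insert rows between the two statements. The table name `header` and the `doc_id`/`doc_ref` columns are hypothetical, and the flag column is named `has_polled` here.

```python
import sqlite3

POLL_SQL = "SELECT doc_id, doc_ref FROM header WHERE has_polled = 'N' ORDER BY doc_id"
POST_POLL_SQL = "UPDATE header SET has_polled = 'Y' WHERE has_polled = 'N'"


def poll_and_post_poll(conn: sqlite3.Connection) -> list:
    """Run the poll and post-poll statements as a single transaction."""
    conn.execute("BEGIN IMMEDIATE")
    try:
        rows = conn.execute(POLL_SQL).fetchall()
        conn.execute(POST_POLL_SQL)  # only sees the rows the poll saw
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")  # nothing is marked if anything fails
        raise
    return rows


def make_demo_db() -> sqlite3.Connection:
    # isolation_level=None: transactions are controlled explicitly above.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute(
        "CREATE TABLE header (doc_id INTEGER PRIMARY KEY, doc_ref TEXT, "
        "has_polled CHAR(1) NOT NULL DEFAULT 'N')"
    )
    return conn
```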

This option works when you have control over the table's schema (or you are working on your own table). In my case, though, the supplier won't be too happy about me adding columns to their tables. So this option, polling the base table using a flag attached to it, was out of the question.

This left me with just two options:

- A trigger on the main table copying data to a target table, which can then be used in either fashion (“TableChangeEvent with Delete After Poll” or “poll and post-poll statements”). This is still problematic, because the supplier might not like it: extra triggers could interfere with business logic or adversely affect performance.

- Leverage timestamp columns and an external “poll table”, as Richard Seroter pointed out in the feedback section of his walkthrough article. (To summarise: he had another table with just one entry in it, the last poll time. He used that as a control element when polling the main table and set this value in the post-poll statement.) This seems a simple enough option, and on testing it out, it works brilliantly. It has the advantage that the supplier won't mind me adding another table, and it won't affect their upgrade scripts etc.
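Here is a minimal Python/SQLite sketch of the external poll-control pattern. It assumes integer timestamps and hypothetical table and column names (`business_document_header`, `updated_at`, `poll_control.last_poll`). One design choice is worth flagging. This sketch advances the marker to the newest timestamp actually returned rather than to "now". That way, a row stamped before the poll but committed after it is still picked up next time rather than skipped. The flip side is that a late row carrying exactly the same timestamp as the marker would be missed, so timestamp granularity matters.

```python
import sqlite3


def poll_with_control_table(conn: sqlite3.Connection) -> list:
    """Poll rows newer than the stored marker, then advance the marker,
    all inside one transaction (poll + post-poll)."""
    conn.execute("BEGIN IMMEDIATE")
    try:
        (last_poll,) = conn.execute("SELECT last_poll FROM poll_control").fetchone()
        rows = conn.execute(
            "SELECT doc_ref, updated_at FROM business_document_header "
            "WHERE updated_at > ? ORDER BY updated_at, doc_ref",
            (last_poll,),
        ).fetchall()
        if rows:
            # Post-poll: move the marker only as far as the data actually returned.
            conn.execute("UPDATE poll_control SET last_poll = ?", (rows[-1][1],))
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise
    return rows


def make_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:", isolation_level=None)
    # The supplier's table is left untouched; only poll_control is ours.
    conn.execute("CREATE TABLE business_document_header (doc_ref TEXT, updated_at INTEGER)")
    conn.execute("CREATE TABLE poll_control (last_poll INTEGER)")
    conn.execute("INSERT INTO poll_control VALUES (0)")
    return conn
```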

So this “External Poll Control” pattern is the least invasive of my options. At this point I still don't need any stored procs (but they may still be required).

Lessons & Considerations

Some of the things to consider when choosing your notification mechanism are:

(1) Schema control and support: if you don't control your schema, your options are obviously limited, but there are creative ways around it (like the external table). Of course, if the system is completely maintained by a third party and the DBA won't let you touch the schema, then this ain't gonna work. You may have to resort to the TableChangeEvent to give you the whole data set, and then build a custom “changeset” identification mechanism in a database that you control.

(2) Triggers are not always good: even if you do control the schema, triggers may not be a good thing to use (consider performance and data integrity). Some systems have more than one trigger on a table, and the sequence of execution could trip you up. It's no use trying to tell them things like “the business logic shouldn't be in the database”. Sometimes it has to be, and even when it doesn't, it's there. Live with it!

(3) Possible data loss: under heavy load, suppose you chuck the entire table at BizTalk (via the TableChangeEvent) with “Delete After Poll”. What's the guarantee that the delete statement won't kill off transactions added in the little window while the data was being sent to BizTalk? For example, if you had 100 notification records and sent them for consumption, maybe 25 more would have been added before the delete fired. Will the delete knock those off too before they can be sent in the next round? Or is this guaranteed not to happen? (If you know the adapter well enough to answer this, please let me know. I could cook up a test scenario, but it would save time if someone could give me the answer here.)

(4) Size of the data set and complexity of the schema: if possible, go for the minimum data needed to notify, and then use a content enrichment step to get the rest of the data through other published interfaces. For example, in our scenario, the business document data is spread across many tables and a lot of it is held in generic “name-value” pairs. The SQL to build the whole document would be horrendous, assuming we could even spare the time to write it all. Fortunately, the supplier has provided a web service that retrieves the business document for us, so all we need is the document reference in the notification.

Of course, if we get thousands of notifications, then calling the web service that many times is not good either, and some throttling would be needed. It's a Java-based system, so I can't opt for an inline call as I might if I had a .NET target. This area is still up for grabs, and I'm gathering some stats on volumes to see whether the web service is still the best choice. I’ll keep you posted.
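The race in point (3) is easy to reproduce in miniature. This Python/SQLite sketch is not a statement about how the Oracle adapter actually behaves, which is exactly the open question above. It simulates another session inserting rows between the read and the delete, then compares a blanket delete with a delete restricted to the keys actually returned. Table and function names are hypothetical, and the concurrency is simulated by a callback rather than a real second session.

```python
import sqlite3
from typing import Callable


def fetch_then_delete(conn: sqlite3.Connection,
                      during_window: Callable[[sqlite3.Connection], None],
                      keyed: bool) -> list:
    """Read all notifications, let `during_window` simulate concurrent inserts,
    then delete either everything (keyed=False) or only what was read."""
    rows = conn.execute("SELECT id, doc_ref FROM notifications ORDER BY id").fetchall()
    during_window(conn)  # e.g. 25 new rows arrive while the batch is in flight
    if keyed:
        conn.executemany("DELETE FROM notifications WHERE id = ?", [(r[0],) for r in rows])
    else:
        conn.execute("DELETE FROM notifications")  # also removes the late arrivals
    return rows


def make_demo_db(n: int) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE notifications (id INTEGER PRIMARY KEY, doc_ref TEXT)")
    conn.executemany("INSERT INTO notifications (doc_ref) VALUES (?)",
                     [(f"DOC{i}",) for i in range(n)])
    return conn


def add_late_arrivals(conn: sqlite3.Connection) -> None:
    conn.executemany("INSERT INTO notifications (doc_ref) VALUES (?)",
                     [(f"LATE{i}",) for i in range(25)])


if __name__ == "__main__":
    for keyed in (False, True):
        conn = make_demo_db(100)
        sent = fetch_then_delete(conn, add_late_arrivals, keyed)
        left = conn.execute("SELECT COUNT(*) FROM notifications").fetchone()[0]
        print(keyed, len(sent), left)  # False 100 0, then True 100 25
```

Whatever the adapter does internally, deleting by the keys that were actually sent (or wrapping read and delete in one serializable transaction) is the property you want.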

If there are other things that need to be considered and you’d like to contribute to this list, please let me know.

What's next?

I'm currently still investigating how much work is involved in building the “minimum data set” that will let me content-enrich peacefully using the web service. I don't want a flood of notifications about business documents I'm not interested in, and there is some data available that will let me filter by document type. I also don't want to hard-code that filter, so there's some work to be done on parameterising it. The aim is to let the system be extended to pick up more document types in future without rewriting the code.
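Here is one way to keep the document-type filter out of the code, sketched in Python. It assumes the wanted types live in a small JSON configuration and that the notification query can take bind parameters ('?' markers, as used by ODBC-style drivers). The `documentTypes` key, the `doc_type` column and the type codes are hypothetical.

```python
import json


def load_wanted_types(config_text: str) -> list:
    """Read the document types to subscribe to from configuration."""
    types = json.loads(config_text)["documentTypes"]
    if not types:
        raise ValueError("at least one document type must be configured")
    return sorted(set(types))  # de-duplicated, stable order


def build_type_filter(doc_types: list) -> tuple:
    """Return a parameterised SQL predicate plus its bind values, so adding
    a document type is a config change rather than a code change."""
    placeholders = ", ".join("?" for _ in doc_types)
    return f"doc_type IN ({placeholders})", list(doc_types)


if __name__ == "__main__":
    wanted = load_wanted_types('{"documentTypes": ["INVOICE", "ORDER"]}')
    print(build_type_filter(wanted))  # ('doc_type IN (?, ?)', ['INVOICE', 'ORDER'])
```

Using bind values rather than pasting the type codes into the SQL text also keeps the statement safe if the configuration is ever edited carelessly.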

I’ve talked a little about the Content Enricher already. I was intending that to be my next post, but it seemed better to put it all here. There's more to say on that subject, so stay tuned. Depending on the time available, I’m going to put more R&D into the Oracle adapter. The External Poll route seems sufficient for now, but I just have a feeling it won't be as easy as this.

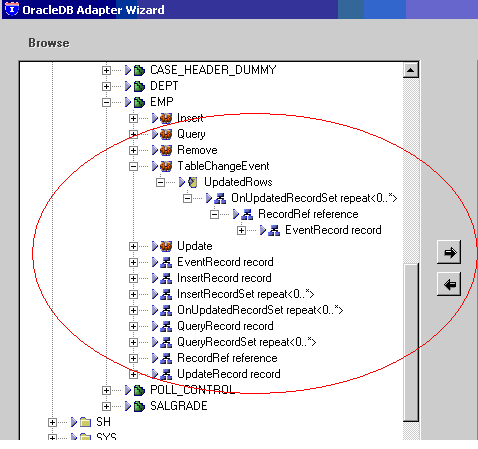

There seems to be a wealth of options in the Oracle adapter, and I don’t think even the MSDN documentation goes into all of them. Look at the following screenshot of what you see when you browse through the schema (on clicking Manage Events):

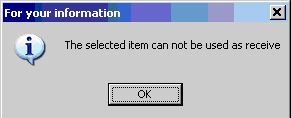

I thought all the highlighted items might be further filters on the events that would give us more control over the data, but when I tried to select any of them, it threw up this odd little message box saying “The selected item can not be used as a receive”.

I think that’s rather odd. What’s the point of showing them if they can't be used at all? Maybe they're for the send side? I’ll need to take a look, but if anyone has some insight on this and would like to share it, please let me know.

Until next time... happy BizTalking!

The Adapter Diaries – Oracle Adapter – 1

Well, after quite a few months of mainly coaching and mentoring, I finally got permission to get my hands dirty with code on a new project where, it appears, yours truly is going to be a one-man army. It's quite an interesting project. It involves getting data from an Oracle system, content-enriching it from some Java web services, transforming it and posting it to one of those loosey-goosey web services that have no strong typing at all.

I will have quite a lot to say about the web services soon, and hopefully some interesting things to share on patterns and architecture choices. For now, though, I’m going to focus on the Oracle ODBC adapter (not the newfangled WCF one). I was quite excited to finally get to code again, and to work on an adapter that I had heard so much about but never had a chance to use.

There’s some pretty good material about the BizTalk 2006 Oracle LOB adapter, and I have included some good reference articles and blog posts about it. Still, I wanted to post my experiences of getting started with the adapter. In my opinion, while all the articles are good, none of them takes you all the way from start to finish, and some important things along the way are missing. Some of this may be obvious to a few folk, but I’m one of those who like the whole picture laid out before me, so maybe I can do justice to the topic if you are looking for something similar. My intention is to provide some of the missing pieces, not to replace the brilliant stuff out there.

A word of caution first up: when starting out with this Oracle adapter, “Go slow! Go very slow!” That applies to reviewing the material as well as to clicking through the options in the setup and configuration process.

Acquiring the adapter:

I found it surprisingly difficult to actually get my hands on the adapter. It doesn't come with the default install, and my Google searches turned up various articles and posts, including ones about the new WCF adapters, but nothing about where to get it. I finally found this CodeProject article on BizTalk Oracle Adapter installation, which saved my bacon. I downloaded the adapter pack and installed the Oracle adapter, after first installing the Oracle client. In my case I am working with a 9i installation. I could have used a 10g or 11g client to connect to the 9i server, but I didn't want to take any chances and plumped for the 9i client.

Upgrading the driver

As soon as you install the adapter, you must upgrade the Oracle ODBC driver, because the one supplied with the Oracle client install is very old. (It is also worth noting that the adapter uses the Oracle driver, not the Microsoft ODBC driver for Oracle.) Most of the talk on the web, including the aforementioned CodeProject article, says you need to upgrade to driver version 9.2.0.5.4 and mentions an exe named ORA92054.exe. The CodeProject article also provides a link to it, but that link is broken. I couldn't find that version anywhere for a while, and when I went to the Oracle site, I found that the latest driver version for 9i clients is 9.2.0.8. (I eventually found an archive on Oracle Technet with 9.2.0.5.4 reportedly available, but that came too late, as I had gone with 9.2.0.8 by then.) Of course, if you have a 10g or 11g client, its default ODBC driver supersedes even this and, according to some MSDN forum posts, works just fine. Anyway, after upgrading to 9.2.0.8, this is what you see:

Installing the adapter into Biztalk

This is a fairly straightforward step, and both the official Microsoft documentation referenced below and the CodeProject article tell you about it. No hang-ups there.

Simple Scenario – Dumping a table to a file

This was my first foray into using the adapter. It follows the theme of Richard Seroter's first scenario (Wiretap) in his walkthrough (referenced below), but as I mentioned earlier, there's some basic stuff involved that Richard hasn’t covered.

Setting up the receive locations

First I created a new one-way receive port and a new receive location using the Oracle transport.

Since I only wanted to pump data from the table out to a file, I used the pass-through pipelines (PassThruReceive on the receive location and PassThruTransmit on the file send port), because there was no specific document to watch for.

Gotchas in transport configuration

Note: I don’t have any problem showing you the actual credential names etc., because this was all done on a development box that no one can hack into. Besides, I find it easier to follow things when I can trace actual settings across screens. Some posts leave this info out, which can make them difficult to follow for some readers (like myself :-)). Take a look at the Transport Properties box below, and I will talk about some of the entries.

The first thing to note is the password. You cannot just type the password into the box; you have to click the dropdown and start typing the password there. I clicked the dropdown, accidentally let it slip back, and an encrypted string appeared as if by magic (in hindsight, that was the equivalent of a blank password).

I didn't realise it at the time, but after setting the other values and going to the Managing Events entry, I got the weird error shown in the following picture.

The next thing to note is the PATH. In some blog entries it is shown as “c:\oracle\ora92”. My Oracle client is on the D drive, so I set it to “d:\oracle\ora92”, but that wasn't enough: I had to include the BIN folder, thanks to a prompt from this article. It may seem obvious to some, but it wasn't to me.

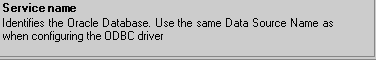
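If you script your bindings or just want a sanity check, the PATH rule is easy to encode. This is a minimal sketch, assuming the value is a plain Windows path string; `oracle_bin_path` is a hypothetical helper, not part of the adapter. It uses Python's `ntpath` module so that Windows path rules apply even when the script runs elsewhere.

```python
import ntpath  # Windows path rules, regardless of the OS running this script


def oracle_bin_path(path: str) -> str:
    """Return a PATH value that points at the Oracle client BIN folder.

    Accepts either the Oracle home (e.g. d:\\oracle\\ora92) or the BIN folder
    itself, and appends BIN only when it is missing.
    """
    trimmed = path.rstrip("\\/")
    if ntpath.basename(trimmed).lower() == "bin":
        return trimmed
    return ntpath.join(trimmed, "BIN")


if __name__ == "__main__":
    print(oracle_bin_path("d:\\oracle\\ora92"))        # Oracle home: BIN appended
    print(oracle_bin_path("d:\\oracle\\ora92\\bin\\")) # already BIN: kept as is
```

The adapter only ever sees the final string, so the point is simply that the value must end at the BIN folder rather than at the Oracle home.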

Next, set the Service name. Now take a close look at the hint in the properties window. It says

That's what you have to watch out for. I first set it to PILOT, which is the service name in TNSNAMES and in Enterprise Manager, but I had named the DSN OraclePILOT, as shown below.

So this threw me for a while. I could have given the DSN the same name as the service, but I guess that wouldn't have shown me what I was missing by not reading the hints.
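The gotcha boils down to one lookup: the Service Name property has to match an ODBC DSN name, and the TNS service name behind it is not accepted in its place. Here is a minimal sketch of that check; `ODBC_DSNS` and `check_service_name` are hypothetical, and the DSN-to-service mapping simply mirrors the names used in this post (DSN OraclePILOT pointing at TNS service PILOT).

```python
# Hypothetical ODBC data sources: DSN name -> TNS service it points at.
# The names mirror this post: DSN "OraclePILOT" for TNS service "PILOT".
ODBC_DSNS = {"OraclePILOT": "PILOT"}


def check_service_name(value: str, dsns: dict[str, str] = ODBC_DSNS) -> str:
    """Validate a Service Name value against the configured DSNs.

    A value that only matches a TNS service name is rejected with a hint
    pointing at the DSN that should be used instead.
    """
    if value in dsns:
        return value
    matching = [dsn for dsn, tns in dsns.items() if tns.lower() == value.lower()]
    if matching:
        raise ValueError(
            f"{value!r} is a TNS service name; use the DSN {matching[0]!r} instead"
        )
    raise ValueError(f"no ODBC DSN named {value!r}")


if __name__ == "__main__":
    print(check_service_name("OraclePILOT"))
    try:
        check_service_name("PILOT")
    except ValueError as exc:
        print(exc)
```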

Anyway, after setting the SERVICE name and the user name, I was then able to set up the POLL statement. As you can see in the transport properties screenshot, I was just referencing the good old SCOTT schema. Then, on clicking the Manage Events browse button, it threw that weird error again. To get past this, I had to click OK on the Transport Properties dialog (after entering the credentials and service name) and then go back into the properties to open the Manage Events window. Again, it may be obvious to some, but it wasn't to me. Why couldn't it save the configuration automatically when Manage Events was clicked? After all, if the credentials are wrong, it's simple enough to throw an appropriate error message. (By the way, I later found more MSDN documentation on setting the transport properties, linked in the references below, and it clearly says to click OK after setting the user name, so I guess the principle of RTFM applies here.)

Another important point: set the Polling statement AFTER you finish with Managing Events, because you have to choose whether you want a Table Change Event (in which case Polling and Post-Polling don't apply) or NativeSQL (for which Polling and Post-Polling must be specified). Again, I think the order of the entries in the dialog box leaves much to be desired; it is definitely not intuitive.
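The dependency between the event choice and the polling fields is easier to see written down as a rule. The sketch below encodes the rule exactly as described in the paragraph above; `OracleReceiveConfig` and `validate` are hypothetical and are not the adapter's own object model. The SQL strings in the example are placeholders, since the actual Poll statement used here only appears in the screenshot.

```python
from dataclasses import dataclass


@dataclass
class OracleReceiveConfig:
    """Hypothetical model of the receive location settings discussed here."""
    event: str                     # "TableChange" or "NativeSQL"
    poll_statement: str = ""
    post_poll_statement: str = ""


def validate(config: OracleReceiveConfig) -> list[str]:
    """Return a list of problems, following the rule described in this post."""
    problems = []
    if config.event == "TableChange":
        # Polling and Post-Polling do not apply to a Table Change event.
        if config.poll_statement or config.post_poll_statement:
            problems.append("Poll/Post-Poll statements do not apply to a Table Change event")
    elif config.event == "NativeSQL":
        # NativeSQL needs both statements filled in.
        if not config.poll_statement:
            problems.append("NativeSQL needs a Poll statement")
        if not config.post_poll_statement:
            problems.append("NativeSQL needs a Post-Poll statement")
    else:
        problems.append(f"unknown event type {config.event!r}")
    return problems


if __name__ == "__main__":
    print(validate(OracleReceiveConfig("TableChange")))
    print(validate(OracleReceiveConfig("NativeSQL", "SELECT * FROM SCOTT.EMP")))
```

Checking the event first and the statements second is the same order the dialog effectively forces on you.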

OK, so when I saved the config and then went to Manage Events, it showed me the browsing window, but there was nothing under the service name at first (because I had set the service name to PILOT instead of OraclePILOT). Once I changed that, the window showed me the following:

So then, following along with the article, I set the NativeSQL event as shown in the following screen. Note that I just highlighted NativeSQL and added it; I didn't go any further down to lower-level items such as SQLEvent (at this point I don't know what they mean, so it's best not to play around too much; maybe later a bit of experimentation will be in order).

(After this, I set the Poll statement.) That's all there is to it. The rest involves setting the BizTalk receive handler and the pipeline.

So I then created a send port that subscribes to the receive port (using the filter BTS.ReceivePortName) to dump the message to a file, and sat back.
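A filter on BTS.ReceivePortName means the send port picks up everything published through that receive port, whatever the message type. Here is a rough model of that subscription match; `route` is a hypothetical, heavily simplified stand-in for the BizTalk message box (real filters also support OR groups and other operators), and the port names are illustrative apart from ReceivePort1, which comes from the error message further down.

```python
# Hypothetical, simplified model of BizTalk content-based routing: each send
# port has filter predicates over context properties, and a published message
# goes to every port whose predicates all match (equality only, AND only).
SendPortFilters = dict[str, dict[str, str]]


def route(context: dict[str, str], ports: SendPortFilters) -> list[str]:
    """Return the send ports whose filter predicates all match the context."""
    return [
        name
        for name, filters in ports.items()
        # A port with no filters subscribes to nothing in this model.
        if filters and all(context.get(prop) == value for prop, value in filters.items())
    ]


if __name__ == "__main__":
    ports = {
        "FileDumpSendPort": {"BTS.ReceivePortName": "ReceivePort1"},
        "UnrelatedPort": {"BTS.ReceivePortName": "SomeOtherPort"},
    }
    message_context = {"BTS.ReceivePortName": "ReceivePort1"}
    print(route(message_context, ports))
```

Because the filter never mentions a message type, nothing in this subscription depends on a schema being deployed.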

Initially I had used the XML receive and send pipelines, but that didn’t work. All I got was an error message saying

There was a failure executing the receive pipeline: “Microsoft.BizTalk.DefaultPipelines.XMLReceive, Microsoft.BizTalk.DefaultPipelines, Version=3.0.1.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35” Source: “XML disassembler” Receive Port: “ReceivePort1” URI: “OracleDb://OraclePILOT_7e690853-1ea3-4736-adb7-5134efec6366” Reason: Finding the document specification by message type “http://schemas.microsoft.com/[OracleDb://OraclePILOT/NativeSQL]#SQLEvent” failed. Verify the schema deployed properly.

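That's one of the familiar BizTalk errors. It happens because the XML disassembler in the XMLReceive pipeline looks for a deployed schema matching the message type, and no schema for the NativeSQL SQLEvent message had been deployed. Since the send port was only subscribing to the receive port, not to a specific message type, no schema was actually needed, so once I set the pipelines to pass-through on both ends, I got my first successful file dump, shown below.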
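To see why pass-through sidesteps the error, here is a rough model of the lookup step. A BizTalk message type is the root element's namespace, a `#`, and the root element's name, which matches the shape of the type quoted in the error. The XML disassembler needs a deployed schema for that type, while PassThruReceive has no disassembler and does no lookup. The functions `message_type` and `disassemble` are hypothetical simplifications, not BizTalk APIs, and the sample document is a placeholder that keeps only the root element and namespace taken from the error message.

```python
import xml.etree.ElementTree as ET


def message_type(xml_text: str) -> str:
    """Build a BizTalk-style message type: root namespace + '#' + root name."""
    tag = ET.fromstring(xml_text).tag  # ElementTree format: '{namespace}root'
    if tag.startswith("{"):
        namespace, _, root = tag[1:].partition("}")
        return f"{namespace}#{root}"
    return tag  # no namespace: the type is just the root name


def disassemble(xml_text: str, deployed_schemas: set[str], pass_through: bool) -> str:
    """Mimic the schema lookup step that made XMLReceive fail in this post."""
    if pass_through:
        return "passed through unchanged"  # PassThruReceive does no schema lookup
    msg_type = message_type(xml_text)
    if msg_type not in deployed_schemas:
        raise LookupError(
            f"Finding the document specification by message type {msg_type!r} failed"
        )
    return msg_type


if __name__ == "__main__":
    sample = '<SQLEvent xmlns="http://schemas.microsoft.com/[OracleDb://OraclePILOT/NativeSQL]"/>'
    print(message_type(sample))
    print(disassemble(sample, deployed_schemas=set(), pass_through=True))
    try:
        disassemble(sample, deployed_schemas=set(), pass_through=False)
    except LookupError as exc:
        print("XMLReceive-style failure:", exc)
```

The alternative fix, if you did want XMLReceive, would be to deploy a schema whose namespace and root match that message type.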

So, that's it. Check out Richard Seroter's article referenced below for more of the use cases he set out to prove. In my next article I'll talk about the choice of events (TblChange vs SQLEvent) that I had to wrestle with.

Hope you found this helpful. Your feedback would be much appreciated. Here are some of the good reference materials I found.

References

Community

BizTalk Oracle Adapter Installation

Richard Seroter – A walk through the BizTalk 2006 Oracle adapter. A brilliant post from top guru Mr. Seroter; also check out the comments on the post and his replies for some interesting discussions.

Richard Seroter – Important Hotfixes for the Oracle adapter

A simple BizTalk 2006 Oracle Adapter demo. Another good one.

Official MS stuff

Installing and configuring Adapters for enterprise applications (also available as a downloadable whitepaper at this link)

Oracle ODBC database adapter. The whole enchilada of the Oracle adapter MSDN docs, definitely worth reading at some point 🙂, ideally at the beginning of the dev effort!

Oracle Database Transport Properties Dialog Box (part of the above link but called out here as a special mention)

Microsoft BizTalk Adapter Pack Samples

Oracle

Oracle ODBC Drivers (latest) Main page

ODBC Driver 9.2.0.5.4 (I saw this one too late, after I had installed the latest, but this doesn't really matter unless you want this version specifically)