VS Themes Collection (VS10,VS08,VS05)

Just came across this great site with a collection of color schemes for Visual Studio 2010 and older versions too. There are some real beauties there. I quite like the retro look of Turbo-Pascal Revisited (though I doubt I could use it for long without drowning in the blue) and have started to use Desert-Ex Revised with the font changed to Consolas 12 point. Vibrant Ink is pretty good but can hurt the eyes with its brightness.

I also found that when you first run VS10, it asks if you want to import your current settings from previous versions and then migrates them across nicely. I had been running Ragnarok Blue, one of Tomas Restrepo’s settings, in VS2008 and that ported across very nicely. This StudioStyles site also has the VS10 version of Ragnarok Blue.

So if you like spicing up your coding experience with different color schemes, this site should have something for you. Enjoy.

The Adapter Diaries – Oracle Adapter – 2

Having successfully started with the Oracle adapter and dumped the entire table out to a file, I decided to look into the mechanisms for getting the data out for the official assignment. I’d like to share what I found. As I mentioned in the previous article, my first forays into the adapter stuck to the simpler options and there is no rocket science involved here, so if you are still interested, read on.

Choosing The Notification Mechanism

I picked the TableChangeEvent first. One of the supplier’s tables is a BusinessDocument_Header table where entries are made whenever these documents are created or updated. Further details about the contents of the business document are available in a mass of other tables, but the main notification source is this Header table.

Going by just the name of the event, I was under the assumption that the adapter would somehow know when a change occurred in the table and would be able to notify me. So, using the same receive port that I previously created, I added another receive location and in that location I set a table changed event for the Header table. The first time round, of course, all the rows were output into the file. I didn’t mind that. I changed some rows in the table and waited. After the polling interval the same thing happened again: all the rows were dumped, not just the ones I had changed.

At first I was rather disappointed with the outcome, but on thinking about it a bit more, it all seems perfectly logical. In a generic, comprehensive adapter, the transmitting system cannot be expected to know what constitutes a change (or more specifically, what the user’s definition of a change is). Is it a new insert? Is it an update to a specific column or set of columns? This could be possible in a custom adapter written expressly for this purpose but not in a generic one.

Before looking at other options, I looked at the “Delete After Poll” setting for this TableChangeEvent. This works fine. As soon as the table data was returned to me the entire contents were deleted, and the next time I added a row it got returned immediately and the table was emptied again. However, while interesting to play with on a disposable dev schema, this option is not feasible on the main Header table because that’s a core part of the application and there could be other components subscribing to that data.

The next option I tried was to use NativeSQL with polling and post-polling statements. Since I have a dev version of the database, I added another column “HAS POLLED”, defined as a CHAR(1) and set to N by default. The Poll statement selects on that column and the Post-Poll statement sets it to Y. This works as advertised. It was also interesting to read some details about polling in this MSDN article, “Poll, Post Poll, and TableChangeEvent”.
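The flag-column approach above can be sketched roughly as follows. This is only an illustration in Python against an in-memory SQLite database, not the adapter itself: the table layout, the `has_polled` column name, and the two SQL statements are all assumptions standing in for whatever the real Oracle schema and receive location would use.

```python
import sqlite3

# Hypothetical stand-in for the header table on a dev schema (names are assumptions).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE businessdocument_header ("
    " doc_id INTEGER PRIMARY KEY,"
    " doc_ref TEXT NOT NULL,"
    " has_polled TEXT NOT NULL DEFAULT 'N')"
)
conn.executemany(
    "INSERT INTO businessdocument_header (doc_ref) VALUES (?)",
    [("DOC-1",), ("DOC-2",)],
)
conn.commit()

# Poll picks up only unflagged rows; post-poll flags them as sent.
POLL_SQL = (
    "SELECT doc_id, doc_ref FROM businessdocument_header "
    "WHERE has_polled = 'N' ORDER BY doc_id"
)
POST_POLL_SQL = (
    "UPDATE businessdocument_header SET has_polled = 'Y' WHERE has_polled = 'N'"
)


def poll_once(connection):
    """Run the poll and post-poll statements as one unit of work."""
    with connection:  # commits on success, rolls back on error
        rows = connection.execute(POLL_SQL).fetchall()
        connection.execute(POST_POLL_SQL)
    return rows


first = poll_once(conn)   # both original rows
conn.execute("INSERT INTO businessdocument_header (doc_ref) VALUES ('DOC-3')")
conn.commit()
second = poll_once(conn)  # only the newly added row
third = poll_once(conn)   # nothing new, so nothing returned
print(first, second, third)
```

Each poll returns only rows that have not been flagged yet, which is the behaviour the TableChangeEvent on its own did not give me.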

A useful note about the transactional feature in that article is: “It is important to note that the execution of the poll statement and post poll statement is performed within a transaction of serializable isolation level. Records or changes that were not visible when executing the poll statement are also not visible when the post poll statement executes. This may reduce throughput on some systems, but it guarantees that the above-mentioned example only affects the data that was sent to BizTalk Server with the poll statement.”

The article also goes on to illustrate examples of a poll and post-poll statement for a sample table. I was quite impressed with this because, from memory (of a dim and distant time) when I worked with the SQL adapter, we had to roll our own transactional notification using stored procs etc. (I didn’t get round to using updategrams) to update the columns that had been sent for consumption.

This option works when you have control over the schema of the table (or you are working on your own table), but in my case the supplier won’t be too happy about me adding columns to their tables, so polling the base table using a flag attached to it was out of the question.

This left me with just two options:

- A trigger on the main table copying data to a target table, which can then be consumed in either fashion (“TableChangeEvent with delete after poll” or “poll & post-poll statements”). This is still problematic because the supplier might not like it: extra triggers could interfere with business logic or adversely affect performance.

- Leveraging timestamp columns and external “poll tables”, as Richard Seroter pointed out in the feedback section of his Walkthrough article. (To summarise: he had another table with just one entry in it, the last poll time, used that as a control element when polling the main table, and set this value in the post-poll statement.) This seems a simple enough option and on testing it out it works brilliantly. It has the advantage that the supplier won’t mind me adding another table and it won’t affect their upgrade scripts etc.

So this “External Poll Control” pattern is the least invasive of my options. At this point I still don’t need any stored procs (though they may yet be required).
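A minimal sketch of the “External Poll Control” idea, again in Python with an in-memory SQLite database rather than the real adapter. Everything named here is an assumption for illustration: the `poll_control` table, the `last_updated` column, and the use of plain integer ticks in place of a real timestamp column (which keeps the run deterministic).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Hypothetical supplier table with a last-updated stamp (integer ticks here).
    CREATE TABLE businessdocument_header (
        doc_ref TEXT PRIMARY KEY,
        last_updated INTEGER NOT NULL
    );
    -- The external control table: exactly one row holding the last poll time.
    CREATE TABLE poll_control (last_poll_time INTEGER NOT NULL);
    INSERT INTO poll_control VALUES (0);
    """
)

POLL_SQL = (
    "SELECT doc_ref FROM businessdocument_header "
    "WHERE last_updated > (SELECT last_poll_time FROM poll_control) "
    "ORDER BY doc_ref"
)
POST_POLL_SQL = "UPDATE poll_control SET last_poll_time = ?"


def touch(connection, doc_ref, tick):
    """Insert or update a header row, stamping it with the given tick."""
    with connection:
        connection.execute(
            "INSERT OR REPLACE INTO businessdocument_header VALUES (?, ?)",
            (doc_ref, tick),
        )


def poll_once(connection, now):
    """Poll rows changed since the last poll, then advance the control row."""
    with connection:
        refs = [row[0] for row in connection.execute(POLL_SQL)]
        connection.execute(POST_POLL_SQL, (now,))
    return refs


touch(conn, "DOC-1", 1)
touch(conn, "DOC-2", 2)
first = poll_once(conn, now=2)   # both rows are newer than tick 0
touch(conn, "DOC-1", 3)          # one row is updated later
second = poll_once(conn, now=3)  # only the updated row comes back
third = poll_once(conn, now=4)   # no changes since the last poll
print(first, second, third)
```

The supplier’s table is only ever read; the single write goes to the control table, which is why this route is the least invasive.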

Lessons & Considerations

Some of the things to consider when choosing your notification mechanism are:

(1) Schema Control and support: if you don’t control your schema, your options are obviously limited, but there are creative ways around it (like the external table). Of course, if the system is completely maintained by a third party and the DBA won’t let you touch the schema at all, then this ain’t gonna work. You may have to resort to the TableChangeEvent to give you the whole data set and then build a custom “changeset” identification mechanism in a database that you control.

(2) Triggers are not always good: even if you do control the schema, triggers may not be a good thing to use (consider performance and data integrity). Some systems may have more than one trigger on a table and there may be some sequence of execution that could trip you up. It’s no use trying to tell them things like “the business logic shouldn’t be in the database”. Sometimes it has to be, but even if it doesn’t, it’s there. Live with it!!!

(3) Possible Data Loss: in a heavy load situation, even if you could chuck the entire table at BizTalk (via TableChangeEvent) and use “delete after poll”, what’s the guarantee that the delete statement won’t kill off rows that were added in the little window while stuff was being sent to BizTalk? For example, if you had 100 notification records and sent them for consumption, maybe 25 more would have been added before the delete gets fired. Will the delete knock them off too before they can be sent in the next round? Or is this guaranteed not to happen? (If you know the adapter well enough to answer this, please let me know. I could cook up a test scenario but it would save time if I was given the answer here.)

(4) Size of the dataset & complexity of schema: if it’s possible, go for the minimum data needed to notify and then use a content enrichment step to get the rest of the data through other published interfaces. For example, in our scenario the business document data is spread out across many tables and a lot of it is held as generic “name-value” pairs. The SQL to build the whole document would be horrendous, and that’s assuming we could spare the time to write it all. Fortunately the supplier has provided a webservice that retrieves the business document for us, so all we need is to get the doc reference in the notification.

Of course, we have to consider that if we get thousands of notifications then calling the webservice that many times is not good either, and some throttling would be needed. It’s a Java-based system so I can’t opt to do an inline call as I might attempt if I had a .NET target. This area is still up for grabs and I’m trying to gather some stats on volumes to know if the webservice is still the best choice. I’ll keep you posted.
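To make the throttling idea concrete, here is a rough sketch in plain Python (not BizTalk artefacts). It caps how many enrichment calls run at once using a fixed-size thread pool. The `fetch_document` function is a hypothetical stub for the supplier’s webservice, and the limit of 4 concurrent calls is an arbitrary assumption, not a measured figure.

```python
from concurrent.futures import ThreadPoolExecutor
import threading

MAX_CONCURRENT_CALLS = 4  # assumed cap; the real figure would come from volume stats

_active = 0       # calls currently in flight
peak_active = 0   # highest number of simultaneous calls observed
_lock = threading.Lock()


def fetch_document(doc_ref):
    """Hypothetical stand-in for the supplier's webservice call."""
    global _active, peak_active
    with _lock:
        _active += 1
        peak_active = max(peak_active, _active)
    try:
        # A real implementation would make the SOAP call here.
        return {"doc_ref": doc_ref, "body": f"<document ref='{doc_ref}'/>"}
    finally:
        with _lock:
            _active -= 1


def enrich(doc_refs, max_workers=MAX_CONCURRENT_CALLS):
    """Enrich notifications, never running more than max_workers calls at once."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as the input references.
        return list(pool.map(fetch_document, doc_refs))


notifications = [f"DOC-{n}" for n in range(1, 1001)]
documents = enrich(notifications)
print(len(documents), peak_active)
```

The notifications stay small (just a reference each) and the pool size becomes the single knob that protects the webservice from a flood.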

If there are other things that need to be considered and you’d like to contribute to this list, please let me know.
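On the data loss question in point (3), the window can at least be illustrated outside the adapter. The sketch below uses Python and in-memory SQLite with a hypothetical `notification` table, and simulates 25 rows arriving between the read and the delete. It says nothing about what the Oracle adapter actually does; it only contrasts a blanket delete with a delete scoped to the keys that were read.

```python
import sqlite3


def make_queue():
    """Create a hypothetical notification table holding 100 rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE notification (id INTEGER PRIMARY KEY, doc_ref TEXT)"
    )
    conn.executemany(
        "INSERT INTO notification (doc_ref) VALUES (?)",
        [(f"DOC-{n}",) for n in range(1, 101)],
    )
    conn.commit()
    return conn


def late_arrivals(conn):
    """Simulate 25 rows landing after the read but before the delete."""
    conn.executemany(
        "INSERT INTO notification (doc_ref) VALUES (?)",
        [(f"DOC-{n}",) for n in range(101, 126)],
    )
    conn.commit()


def remaining(conn):
    return conn.execute("SELECT COUNT(*) FROM notification").fetchone()[0]


# Blanket delete: everything goes, including rows that were never sent.
naive = make_queue()
sent_naive = naive.execute("SELECT id, doc_ref FROM notification").fetchall()
late_arrivals(naive)
naive.execute("DELETE FROM notification")
naive.commit()
lost = 25 - remaining(naive)

# Key-scoped delete: only the rows that were actually read are removed.
scoped = make_queue()
sent_scoped = scoped.execute("SELECT id, doc_ref FROM notification").fetchall()
late_arrivals(scoped)
scoped.executemany(
    "DELETE FROM notification WHERE id = ?", [(row[0],) for row in sent_scoped]
)
scoped.commit()
kept = remaining(scoped)

print(lost, kept)
```

In the blanket case the 25 late rows vanish unsent; in the key-scoped case they survive for the next round. Whether the adapter behaves like the first or the second is exactly what still needs confirming.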

What’s next?

I’m still investigating how much work is involved in building the “minimum data set” that will allow me to peacefully content enrich by using the web service. I don’t want a flood of notifications about business docs I’m not interested in, and there is some data available that will allow me to filter on the types of documents. I also don’t want to hardcode that filter, so there’s some work to be done on parameterising it and making it possible for the system to be extended to get more documents in future without rewriting the code.
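One way the filter could be parameterised is to keep the wanted document types in a small table of their own and join to it in the poll statement. The sketch below shows that idea in Python with in-memory SQLite; the `notification_filter` table, the `doc_type` column, and the type names are all hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Hypothetical header table carrying a document type.
    CREATE TABLE businessdocument_header (
        doc_ref TEXT PRIMARY KEY,
        doc_type TEXT NOT NULL
    );
    -- The filter lives in data, not in code: one row per wanted type.
    CREATE TABLE notification_filter (doc_type TEXT PRIMARY KEY);

    INSERT INTO businessdocument_header VALUES
        ('DOC-1', 'INVOICE'),
        ('DOC-2', 'ORDER'),
        ('DOC-3', 'CREDIT_NOTE');
    INSERT INTO notification_filter VALUES ('INVOICE');
    """
)

POLL_SQL = (
    "SELECT h.doc_ref FROM businessdocument_header h "
    "JOIN notification_filter f ON f.doc_type = h.doc_type "
    "ORDER BY h.doc_ref"
)


def poll(connection):
    """Return references only for the document types currently in the filter."""
    return [row[0] for row in connection.execute(POLL_SQL)]


before = poll(conn)

# Extending the system to a new document type is a data change, not a code change.
with conn:
    conn.execute("INSERT INTO notification_filter VALUES ('ORDER')")

after = poll(conn)
print(before, after)
```

Like the external poll table, the filter table sits outside the supplier’s schema, so it should not upset their upgrade scripts.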

I’ve talked a little bit about the Content Enricher already. I was intending that to be my next post, but it seemed better to put it all here. There’s more to say on that subject so stay tuned. Depending on the availability of time I’m going to put more R&D into the Oracle adapter. The External Poll route seems sufficient for now, but I just have this feeling that it won’t be as easy as this.

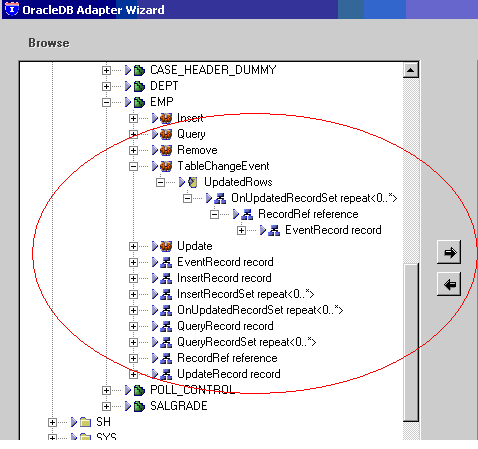

There seems to be a wealth of options in the Oracle adapter there and I don’t think even the MSDN documentation goes into all of them. Look at the following screenshot when you browse through the schema (on clicking Manage Events)

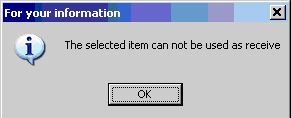

I thought all the highlighted things may be further filters on the events to give us more control over the data but when I tried to select any of them it threw up this odd little message box saying “The selected item can not be used as a receive”.

I think that’s rather odd. What’s the point of showing them if they can’t be used at all? Maybe that’s for the SEND side? I’ll need to take a look, but if anyone has some insight on that and would like to share it, please let me know.

Until next time... happy BizTalking!!

SOA – Adapters and Kramer vs. Kramer

I’ve been involved in an interesting scenario recently which has given me enough fodder for a number of posts. In the first phase of a project we have just completed, we were tasked with adding functionality to an existing app by combining it with data from other sources and providing access to our CRM system as well as some pages on the website. Now the existing app is a classic ASP system with some simple webpages wrapping a multitude of stored procedures.

Deciding on the integration layer was easy. Since we’ve been building up a BizTalk-powered SOA over the past 2 years, it took the shape of orchestrations exposed as webservices. We haven’t been down the route of publishing schemas as web services yet and I’ll explain why in a later post. The crux of the issue then was what the back-end of the service looked like. How do we connect to the LOB application? In some cases the adapter is a given because the third-party application has a particular interface, but what do you do when there is more than one option?

We were wary of connecting directly to the database and first considered using something like the Service Factory to wrap the database in a simple Entity service just providing CRUD functionality. On closer examination, though, it seemed that all the logic was inside the SPs anyway, so adding a business layer and a SOAP interface on top of that was just an unnecessary overhead.

So we decided to go down the route of the SQL Adapter. My reasoning at that point was “it’s just one type of adapter vs the other” (hence the title of the post) and as long as the interface had the right granularity it didn’t matter. To cut a long story short, we ran into a number of issues because, as we found out during implementation, the interfaces didn’t really have the granularity we needed. For instance, instead of being able to call just one SP to do a certain operation we would need to call 2 or 3, and the flow between those SPs was controlled by the ASP front end. Recreating the logic inside an orchestration was too much work (and for several reasons we didn’t want to take core business logic away from the application itself), so we had to write more wrapper SPs. On top of that we also had to change the schema of the DB in some places, enhance existing SPs and so on. We couldn’t make wholesale changes either, to avoid breaking the existing front end. In short, the integration layer was directly exposed to all the vagaries of database development. We got there in the end, but not without considerable loss of hair and sleep.

I was a kid when Kramer vs. Kramer was released and I don’t remember how the movie ended. I do know, however, that at the end of this we definitely preferred one Kramer (the SOAP adapter over our WS interface that never got built) over the other Kramer (the SQL Adapter).

To be fair, it really wasn’t the fault of the adapter anyway. If the SPs had the right granularity and had been layered in a way that avoided the orchestration having to know too much about the database schema, then the SQL interface would have been just as good.

You might ask why bother with the SOAP adapter at all. Here are some of my reasons:

- Adapters vs Inline calls: I don’t want to call the business layer directly from the orchestrations via expression shapes. I want the reliability that adapters give me.

- Ease of development: We needed something easy to develop. The team hasn’t been exposed to writing custom BizTalk adapters so far, and with the time pressure it didn’t seem appropriate to try that route, particularly since there seem to be so many options involved in writing custom adapters and there definitely wasn’t enough time to evaluate them. Also, if we then ran into trouble when deploying them, it would be a bit of a nightmare.

- It’s just a facade anyway: Since all the logic would have been kept in the BLL (and some parts of the DAL), the ASMX is only a facade, as would be the BTS adapter, so why create unnecessary fuss just for a measly interface!!

- It’s a long way to WCF: If we were in the R2 world we could have written a WCF adapter over the business layer directly and opted for a non-SOAP transport, but we are still in the “old” v2006 and WCF is very far away.

- Non-WS interfaces could be a limiting factor: If the opportunity arises in future to productize this, then the BizTalk adapter wouldn’t have any value unless the customer had BizTalk. If they required a web service interface then the ASMX would have been just the ticket (also because WCF isn’t on our radar now).

I wouldn’t consider myself a guru anyway, so perhaps there are even deeper technical reasons why one adapter could be better than another for a given scenario, but the above factors contributed to my stand.

Interestingly, for our next project, we are now tasked with adding a big block of functionality to the old application and exposing that to the integration layer. We have also been asked to overhaul the old application to make it more integration friendly. You can guess which route I’m going down now.

In my next post I’ll tell you about another debate that came up for the new projects.

I’d like to hear from you on how you choose adapters when you have different options and are free to make your own decisions. (Don’t rub salt in by telling me how wonderful WCF is and how the LOB adapter SDK could make my life easier. I know all that and am living in hope that I’ll get to play with those toys sometime soon, but for now I gotta make do with what I have.)

Orcas Team Architect and BizTalk

I have always been fascinated by the VSTS Team Architect and the potential the design surfaces have. I kinda looked on from the sidelines at VS2005 from TechEd 2004 onwards cos I was still stuck in VS2003 land, and actually that remained the case till the middle of this year. So although I installed the stuff on VPCs and tried playing around with things, I never got very far. I did read enough TechNotes and follow along with the newsgroups to gather that while the Application Designer promised a lot, it probably didn’t go far enough, or at least the extension and customization wasn’t for the faint-hearted.

I was also disappointed with the lack of support for BizTalk. Sure, there is a prototype for an external BizTalk webservice, but that’s only of use if you are an ordinary app trying to connect to a BizTalk service. Actually, that also raises an important question. Why have a prototype for a “BizTalk” web service as though BizTalk web services are any different from ordinary services? What does it matter what’s behind the webservice? If you are a BizTalk developer, then a prototype which helps you implement that web service makes sense because you may choose to publish a schema or an orchestration as a web service. From the consumer standpoint it’s all the same, yet the prototype doesn’t do anything for the BTS developer. (If anyone knows the rationale behind that prototype, drop me a line. I’d like to know what they were thinking of when they designed it.) Since there wasn’t much BizTalk there, I left it without going any further. However, automating BizTalk development and deployment is my idea of development nirvana, so I have been looking at various options and I have written some posts on my earlier DNJ blog about these topics.

But, back to the present. I’ve been reading up on the future of VSTS and the features in Orcas, Rosario, Hawaii etc. (all code names for the next releases of VS and VSTS). I came across the VS Team Architect blog and I was particularly taken with features such as Top Down System Design and Conform to WSDL. The enhancements to Importing & Exporting Custom Prototypes also look like a great addition. I dashed off a note to the Team Architect team (and also one to Marty Waz) to quiz them on the level of support for BizTalk and what plans they have in that area. I also downloaded Beta 2 and will get cracking on investigating these features as soon as possible.

I think that BizTalk could do with more GUIs and wizards. This would definitely make it easier to use and flatten the steep learning curve the product currently has. When you are running a team with vastly different skill levels, you can’t have enough automation. No amount of documenting your “standard patterns” helps when you are trying to get everyone to a common level and meet tight deadlines. Automation is definitely no silver bullet, but it’s a huge bonus for the harried architect.

I have grumbled many times in the past that the Solution Designer promised for the “vNext” never materialized, and there’s absolutely no info on whether that’s completely dead or if we can expect something in the next version (perhaps in 2010). I dislike the lack of support in BTS for contract-first development or, to be more specific, WSDL-first development. A lot of the implementations I do now require a WSDL to be defined first with the third parties and then for development to proceed on that basis. But there is no out-of-the-box feature for BizTalk to do this. Aaron Skonnard wrote an article in MSDN magazine a long time ago about contract-first development, but the options to get BizTalk to conform to a WSDL are messy to say the least. There’s a BPEL import wizard but no WSDL import wizard. Why? Surely more developers care about WSDL than about BPEL!! I know they position the product as fully supporting BPEL4WS + some extensions and so the BPEL import will score some points there, but why not a WSDL import?

It’s in this area that I think some integration between BizTalk and the Orcas Team Architect would be very powerful. It would be like a dream come true to be able to feed a WSDL into a wizard, choose whether we want a schema-based or an orchestration-based web service, and have it generate the orchestration. Of course it can’t generate the “business logic” of the orchestration, but it could generate the Facade which links to the ASMX or SVC interface and contains the correct handling of incoming messages, validations, returning of SOAP faults etc. The “inner” orchestrations can be hand coded, or a stub could be created where the facade calls the inner orchestration either directly, through the message box, or by any other mechanism.

The Top Down System Design would also be a great feature, and the prototype import/export could easily link in with Jon Flanders’ Pattern Wizards tool (with a prototype encapsulating a project template or one of the pattern items).

We could go even further. How about an adapter factory that can generate WCF-based adapters or regular ones? Currently we have an adapter framework & some wizards, but then there are also some adapter base classes to use (and there’s some pretty detailed guidance in a whitepaper on writing transactional adapters) and we have to plow through numerous options and figure out the correct interfaces to implement. After that we have to consider how to link in with SSO and all that. This area is ripe for a guidance package.

Also consider the amount of guidance in the end to end scenarios. Getting them all up and running and wading through the docs and code is not a trivial effort, but making all that guidance executable would be such a bonus.

I think a BizTalk solution software factory makes a lot of sense, and when you look at the kinds of stuff coming out from P&P (the service factory, the new modeling edition of the service factory), a BizTalk offering would be really cool and would be able to leverage the advances in GAT, DSLs etc. Maybe a community effort to build something like this would be worth exploring. BizTalk, having such a wide range of applications, would probably need more than one factory (SOA, BPM, CBR etc. + some horizontal facilities: adapters, pipelines, deployment etc.) and a factory to build solutions on top of the ESB toolkit would also be great. These kinds of things would take BizTalk usability far beyond its competitors.

What do you think? Would this be worth starting up as a community effort? Let me know. I came across this post from someone who is working on something similar and have pinged him to enquire if that’s a private effort or something intended to be bigger. Let’s see what comes up.

Blogging Again

So it’s been a long absence from the blog world. I haven’t had much to write from a BizTalk perspective as I was only involved in the analysis and design phase for a new project that is now underway. But the other reason, definitely more important, is my change in status. I’m now the proud dad of an absolutely beautiful baby girl. Her name is Anna Abigail Rebecca.

Take a look at that picture of happiness and tell me if there could be anything more important than spending time with her. I’ve heard it said many times and in many places that parenthood changes your perspective and that has definitely happened to me. So I will try and blog useful stuff but there could be long gaps between posts. However, I have saved up quite a lot to write about so don’t worry. I’ve got plenty for the short term.

More soon.

Hello again, Tech World!

Welcome to my new BizTalk and .NET blog. I have moved residence to WordPress from my old DotNetJunkies site as I was getting fed up with the inability to use IE7 and the poor functionality of the rich text box control there when using Firefox. Hopefully WordPress won’t have these issues, and I have heard lots of good things about the tool. So here I am. I will try and move content from my old blog here if possible. Otherwise I’ll leave that as an archive.